Microsoft Fabric is Microsoft’s effort to consolidate separate but related functions of data engineer, data science and business intelligence into a single cohesive platform. It simplifies building data pipelines, deploying data lakes or warehouses and data governance by placing all of the pieces in the same location and integrating them.

We’ll explain what Fabric is, the One Lake architecture, and if it’s right for your organization.

Table of Contents

What is Microsoft Fabric?

Microsoft Fabric is a new unified analytics platform that brings together all the data and analytics tools that organizations need. It integrates technologies like Azure Data Factory, Azure Synapse Analytics, and Power BI into a single unified product.

In a typically IT and data engineering environment business intelligence tools such as Power BI were separate from the products they were connecting to, hosted on Azure. This built a silo around data engineering and the people converting the transformed data into insights which caused a number of problems that Fabric aims to help.

- Improved performance and scalability – Fabric workloads auto-scale based on the workload and product tier.

- Enhanced security and governance – Out of the box integration with Active Directory / Entra ID and Microsoft Purview

- Integration with other data sources and applications – Fabric seamlessly integrates with Power BI by automatically generating Power BI Semantic Models and making data sources easily discoverable in One Lake Hub.

Let’s take a look at how Microsoft Fabric is put together behind the scenes to gain a better appreciation of how ambitions of a product this is for Microsoft.

The Microsoft Fabric Architecture

The Microsoft Fabric Architecture is a data lake based on delta-parquet stored in Azure Blob Storage. This keeps data storage costs low while maintaining scalable performance by separating the computer layer from the storage layer.

Fabric takes the separation of the compute and storage layer a step further than what is typically seen with Google Big Query or Snowflake by offering a number of different products and services that connect to the data stored in OneLake.

In Fabric, the compute layer could be a Data Warehouse, a Lake House, Data Science Spark Notebooks, Power BI Reports, Real Time Notifications with Data Activator and more.

The image below shows the overall architecture where OneLake is a data lake at the center of a number of products that are available to deploy within a single product platform.

Regardless of which Fabric products your organization deploys, the core of it will be the data storage layer, called OneLake.

What is Microsoft OneLake?

Think of Microsoft OneLake as your organization’s OneDrive, but at a much larger scale. OneLake is designed specifically to handle large amounts of data using modern big data best practices and file formats. It efficiently stores large volumes of data that can be accessed through Microsoft Fabric data warehouses, lakehouses, or notebooks.

OneLake essentially allows businesses to ingest data once, and re-use it many different times all while keeping it stored in one low maintenance, cost effective, single source of data regardless of which department is utilizing the data downstream.

The following video also does a great overview of explaining some of the benefits and features coming with Microsoft Fabric and OneLake

Advantages of Microsoft OneLake

- Centralized Management – Like having your personal files neatly organized in OneDrive, OneLake streamlines data management across the board. It offers a single, unified platform that simplifies tracking, securing, and governing your data.

- Optimized Performance – While OneDrive facilitates easy access to your files, OneLake takes a leap forward. It significantly improves data analysis performance with its centralized data store, further amplified by a suite of optimization techniques.

- Enhanced Security – Your files are safe in OneDrive, but with OneLake, your data’s security reaches a new zenith. It offers robust encryption, stringent access control, and detailed auditing.

- Scalability – Your data needs grow with your organization. Hence, OneLake, much like OneDrive, ensures scalability to meet the demands of organizations of all sizes.

Microsoft OneLake File Explorer

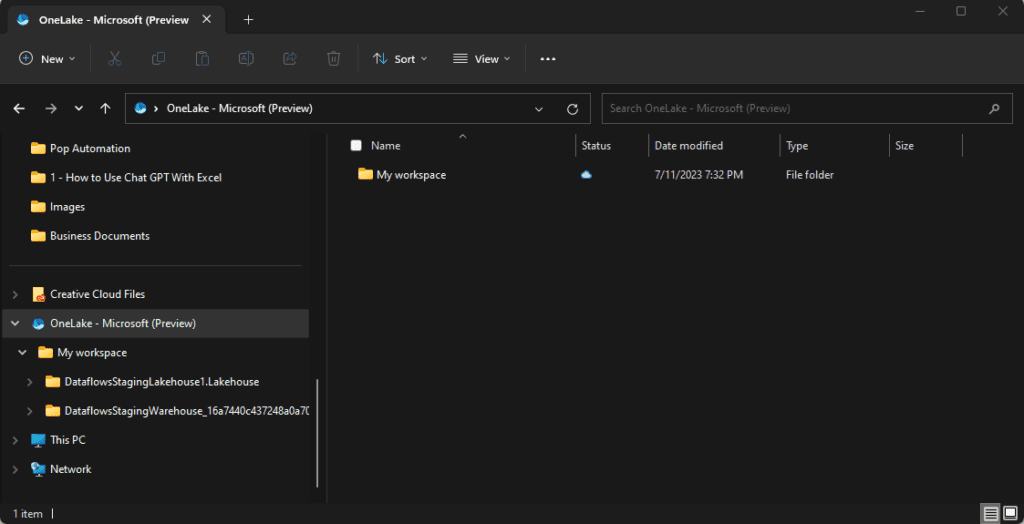

OneLake integrates with Windows Explorer and looks the same as OneDrive does when it’s installed on a PC. The difference is that when you drag and drop files into a OneLake folder they are stored in a data lake that can be ingested by a number of different services that sit in the Microsoft Fabric compute layer.

A drag and drop solution for adding data to OneLake eliminates the need to upload files to SharePoint, OneDrive, create gateway connections, or even build pipelines. It empowers users to quickly add data to your data lake which can be utilized in a range of services.

If you’d like to give it a try, you can download OneLake File Explorer can currently be downloaded from Microsoft.

Is Microsoft Fabric Right for You?

The best and worst part of Microsoft Fabric is how integrated and low maintenance it is. For small and mid-sized organizations that do not have a lot of data engineering resources, the ability to deploy a data lake within a few minutes, ingest data and make it available for reporting is very impressive. Microsoft automates most of the behind the scenes work that data engineering and IT groups would typically spend a lot of time doing making it a turn-key solution.

The trade-off for ease of use is the lack of control. With so much of Fabric being automated and controlled by Microsoft, they’re asking you to put a lot of faith and trust into how they optimize the solution and how much compute power is made available at each pricing tier. There are some reporting tools available, but you don’t gain the ability to adjust and fine tune your deployment which can be a big turn off for large scale enterprises.

Fabric also feels at times like a work in progress. Features that you think should exist, may not be available. Microsoft is aggressively releasing and updating Fabric on a monthly basis to quickly close the gap with more mature data lake solutions like Databricks which can also be a turn off for IT and data engineering teams that already have the in house experience required to manage such a solution.